At Google’s I/O developer conference, CEO Sundar Pichai announced a new technology called Google Lens. The idea with the product is to leverage Google’s computer vision and AI technology in order to bring smarts directly to your phone’s camera. As the company explains, the smartphone camera won’t just see what you see, but will also understand what you see to help you take action.

During a demo, Google showed how you could point your camera at something and Lens would tell you what it is. For example, it could identify the flower you’re preparing to shoot.

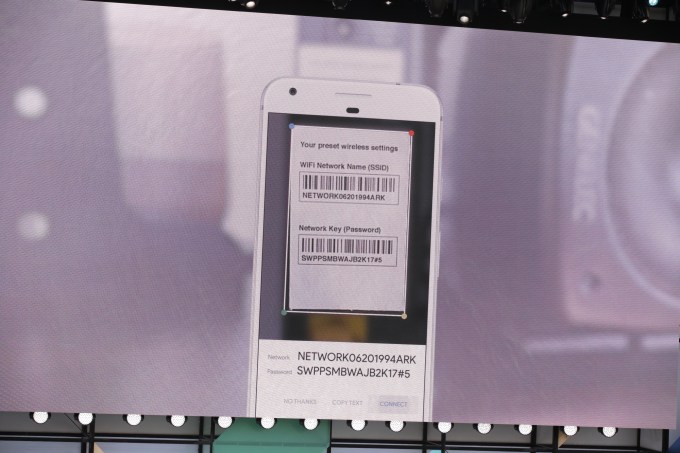

In another example, Pichai showed how Lens could do a common task — connecting you to a home’s Wi-Fi network by snapping a photo of the sticker on the router.

In that case, Google Lens could identify that it’s looking at a network’s name and password, then offer you the option to tap a button and connect automatically.

A third example was a photo of a business’s storefront — and Google Lens could pull up the name, rating and other business listing information in a card that appeared over the photo.

The technology essentially turns the camera from a passive tool that captures the world around you into one that lets you interact with what’s in your camera’s viewfinder.

Later, during a Google Home demonstration, the company showed how Lens would be integrated into Google Assistant. Through a new button in the Assistant app, users will be able to launch Lens and insert a photo into the conversation with the Assistant, where it can process the data the photo contains.

To show how this could work, Google’s Scott Huffman held his camera up to a concert marquee for a Stone Foxes show, and Google Assistant pulled up info on ticket sales. “Add this to my calendar,” he said, and it did.

The integration of Lens into Assistant can also help with translations.

In addition, Pichai showed how Google’s algorithms could more generally clean up and enhance photos. For instance, when you’re taking a picture of your child’s baseball game through a chain-link fence, Google could remove the fence from the photo automatically. Or if you took a photo in low light, Google could automatically enhance it to make it less pixelated and blurry.

The company didn’t announce when Google Lens would be available, only saying that it’s arriving “soon.”
